Artificial intelligence (AI) is reshaping many sectors, including livestock farming. One noteworthy application is automated hen monitoring and assessment, where AI, and deep learning (DL) models in particular, can make the process more efficient and consistent than manual inspection. In this blog post, we explore how recent advances in deep learning can be used to monitor and assess hens with YOLOv8, a state-of-the-art object detection model.

1. AI in Livestock Monitoring and Management

AI in livestock monitoring and management refers to the application of artificial intelligence and related technologies to improve the care, health, and productivity of livestock animals such as cattle, poultry, pigs, and sheep. This technology-driven approach offers several advantages for livestock farming, including increased efficiency, reduced labor, better animal welfare, and enhanced overall profitability. Here are some key aspects and applications of AI in livestock monitoring and management:

1.1. Livestock Monitoring:

AgTech solutions employ various sensors and wearable devices to monitor the health and well-being of individual animals or entire herds. These devices can track parameters such as body temperature, heart rate, activity levels, and even GPS location. Real-time data is collected and transmitted to a central system, allowing farmers to detect signs of illness or distress early, improve breeding programs, and enhance overall animal welfare.

1.2. Nutrition Management:

AI-driven tools help farmers optimize livestock nutrition. Smart feeders and automated feeding systems can provide precise portions of feed tailored to the dietary needs of each animal. These systems can adjust feeding schedules and rations based on factors such as age, weight, and health condition, reducing feed wastage and improving growth rates.

1.3. Animal Behavior Analysis:

Machine learning algorithms and computer vision technologies enable the analysis of animal behavior. Cameras and sensors installed in barns or pastures can track how animals interact, move, and graze. This data can help identify signs of stress, disease outbreaks, or deviations from normal behavior patterns.

1.4. Health Monitoring:

AgTech solutions facilitate remote health monitoring of livestock. Diagnostic tools, such as smart ear tags or blood analysis devices, can be used to detect diseases or health issues early. Data from these devices can be integrated with AI-driven systems to provide predictive analytics for disease outbreaks, enabling timely interventions and reducing treatment costs.

1.5. Reproductive Management:

Advanced reproductive technologies, including artificial insemination and embryo transfer, are enhanced by AgTech. Data-driven tools use analytics to optimize breeding decisions, increasing the chances of successful pregnancies and improving genetics within a herd.

1.6. Livestock Tracking:

GPS and RFID (Radio-Frequency Identification) technologies are used to track the location and movement of livestock. This is especially valuable for managing extensive grazing systems and preventing theft or loss of animals.
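As a minimal illustration of GPS-based tracking, the sketch below flags animals whose latest position lies outside a circular geofence around the farm. The data layout (a dict mapping animal IDs to latitude/longitude pairs), the function names, and the circular fence shape are assumptions for illustration; real deployments often use polygon fences and vendor-specific tracker APIs.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def outside_geofence(fixes, centre, radius_m):
    """Return the sorted IDs of animals whose latest fix lies outside the fence.

    fixes: hypothetical dict mapping animal ID -> (lat, lon) of its latest GPS fix.
    centre: (lat, lon) of the fence centre; radius_m: fence radius in metres.
    """
    lat0, lon0 = centre
    return sorted(
        animal_id
        for animal_id, (lat, lon) in fixes.items()
        if haversine_m(lat0, lon0, lat, lon) > radius_m
    )


fixes = {"cow-01": (52.0000, 5.0000), "cow-02": (52.0100, 5.0000)}
print(outside_geofence(fixes, centre=(52.0, 5.0), radius_m=500))
```

Here 0.01 degrees of latitude is roughly 1.1 km, so only the second animal falls outside a 500 m fence.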

1.7. Environmental Monitoring:

Livestock farming can have environmental impacts. AgTech solutions can monitor factors such as water quality, emissions, and waste management to ensure compliance with environmental regulations and promote sustainable practices.

1.8. Market Access and Traceability:

Blockchain technology is increasingly used in AgTech to establish transparent supply chains for livestock products. Consumers can trace the origin and quality of meat, milk, or eggs back to the source farm, fostering trust and supporting food safety.

1.9. Data Analytics:

AgTech platforms collect vast amounts of data from various sources. Advanced analytics and AI-driven models can turn this data into actionable insights. Farmers can make informed decisions about breeding, feeding, and overall herd management based on data-driven recommendations.

1.10. Labor Efficiency:

AgTech automation reduces manual labor requirements on farms. Robots and automated systems can handle tasks like milking, cleaning, and feeding, freeing up farmworkers to focus on higher-value activities and improving overall labor efficiency.

In summary, AgTech in livestock farming offers numerous benefits, including improved animal welfare, increased productivity, enhanced disease management, and more sustainable practices. These technologies are helping modernize the livestock sector, making it more efficient and environmentally responsible.

2. Health Monitoring of Chickens

Monitoring chicken health through video-based movement analysis involves tracking and analyzing the behavior, posture, and activity of chickens to detect signs of illness, stress, or other health issues. Audio and thermal sensors can complement the video analysis. Here are some key tasks and techniques that can be applied in this context:

2.1. Behavior Analysis:

- Activity Levels: Analyze the overall activity levels of chickens. Reduced activity, lethargy, or abnormal movement patterns can be indicative of health problems.

- Feeding and Drinking Behavior: Monitor feeding and drinking behavior. Changes in appetite or water consumption may signal issues.

- Social Interactions: Observe interactions between chickens. Aggressive behavior or isolation from the flock can be signs of stress or illness.
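The activity-level check above can be sketched in a few lines, assuming a detector and tracker already provide per-hen centroid positions for consecutive frames (that step is not shown). The function names, the pixel-per-frame speed measure, and the 0.3 ratio relative to the flock median are hypothetical choices, not validated thresholds.

```python
import math
from statistics import median


def mean_speed(track):
    """Mean per-frame centroid displacement (pixels/frame) for one hen.

    track: list of (x, y) centroids in consecutive frames, e.g. box centres
    produced by a detector plus tracker (assumed upstream step).
    """
    if len(track) < 2:
        return 0.0
    steps = [math.dist(p, q) for p, q in zip(track, track[1:])]
    return sum(steps) / len(steps)


def low_activity_hens(tracks, ratio=0.3):
    """Flag hens whose mean speed is below `ratio` times the flock median.

    The default ratio is an illustrative assumption, not a validated threshold.
    """
    speeds = {hen: mean_speed(t) for hen, t in tracks.items()}
    flock_median = median(speeds.values())
    return sorted(hen for hen, s in speeds.items() if s < ratio * flock_median)


tracks = {
    "h1": [(0, 0), (3, 4), (6, 8)],
    "h2": [(0, 0), (0, 5), (0, 10)],
    "h3": [(0, 0), (0, 1), (0, 1)],
}
print(low_activity_hens(tracks))
```

Comparing each hen with the flock median, rather than with a fixed speed, keeps the check usable when camera distance or overall flock activity changes during the day.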

2.2. Posture and Gait Analysis:

- Posture Recognition: Detect abnormal postures, such as hunching, limping, or abnormal head positioning, which may indicate discomfort or pain.

- Gait Analysis: Track the walking patterns of chickens. Irregular gait or lameness may suggest leg or joint issues.

2.3. Feather Condition and Plumage Analysis:

- Feather Ruffling: Identify instances of excessive feather ruffling, which can be a sign of discomfort or illness.

- Feather Loss: Monitor and quantify feather loss or damage, which may be due to feather pecking or parasitic infestations.

2.4. Vocalization Analysis:

- Sound Monitoring: Record and analyze chicken vocalizations. Changes in vocalization patterns can indicate distress or discomfort.

2.5. Thermal Imaging:

- Infrared Cameras: Use thermal imaging cameras to detect variations in body temperature. Elevated temperatures may suggest fever or infection.

2.6. Machine Learning and Computer Vision:

- Anomaly Detection: Train machine learning models to detect anomalies in chicken behavior or movement. These models can flag unusual patterns for further investigation.

- Deep Learning: Utilize deep learning techniques for object recognition and tracking to identify individual chickens and monitor their behavior over time.

- Activity Classification: Develop models to classify chicken activities such as feeding, drinking, preening, or resting.
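One simple way to implement anomaly detection on a behavior signal is a trailing-window z-score, sketched below. This is a swapped-in baseline technique rather than a method described in this post; the input series (for example, hourly activity scores for one pen), the window length, and the threshold of 3 standard deviations are assumptions.

```python
from statistics import mean, stdev


def zscore_anomalies(series, window=24, threshold=3.0):
    """Return indices whose value deviates from the trailing window's mean
    by more than `threshold` sample standard deviations.

    series: e.g. hourly activity scores for one pen (hypothetical input).
    window: number of preceding points used as the baseline (must be >= 2).
    """
    anomalies = []
    for i in range(window, len(series)):
        past = series[i - window:i]
        mu, sigma = mean(past), stdev(past)
        if sigma == 0:
            continue  # flat history: z-score undefined, skip this point
        if abs(series[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


activity = [10, 12] * 6 + [30]  # steady pattern followed by a sudden spike
print(zscore_anomalies(activity, window=4))
```

A learned model can replace the z-score later; the baseline is still useful for sanity-checking what a more complex detector flags.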

2.7. Remote Monitoring:

- Video Surveillance: Install cameras in chicken coops or areas where chickens are housed to continuously monitor their behavior.

- Real-Time Alerts: Implement real-time alerting systems that notify farmers or caretakers of any abnormal behavior or health-related issues.
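Real-time alerts are more useful when single-frame glitches do not trigger them. The hypothetical helper below fires an alert only after a minimum number of consecutive anomalous frames, and only once per run; the input (a per-frame boolean flag from some upstream anomaly check) and the default of 5 frames are assumptions.

```python
def debounced_alerts(flags, min_consecutive=5):
    """Turn per-frame anomaly flags into alert start indices.

    An alert fires once a run of `min_consecutive` flagged frames is reached,
    and only once per run, which suppresses one-frame detector glitches.
    Returns the index of the frame where each alerting run began.
    """
    alerts, run = [], 0
    for i, flagged in enumerate(flags):
        run = run + 1 if flagged else 0
        if run == min_consecutive:
            alerts.append(i - min_consecutive + 1)
    return alerts


flags = [False, True, True, False, True, True, True, True, False]
print(debounced_alerts(flags, min_consecutive=3))
```

The short run at frames 1 and 2 is ignored, while the longer run starting at frame 4 raises one alert.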

Video-based movement analysis provides a non-invasive and continuous method for assessing the well-being of poultry. By leveraging these technologies, poultry farmers can detect health issues early, improve the overall welfare of their flocks, and make informed decisions about treatment or intervention when necessary.

3. Chicken Detection

You Only Look Once (YOLO) is a real-time object detection system that predicts bounding boxes and class probabilities in a single forward pass through the network. Unlike two-stage detectors, which first propose regions of interest and then classify each one, YOLO applies a single convolutional neural network (CNN) to the whole image. This design makes YOLO fast enough for real-time use while keeping accuracy competitive. Since its inception, YOLO has gone through several iterations, each improving accuracy, speed, or both. YOLOv8, the latest version at the time of writing, brings further improvements that make it a strong choice for tasks such as monitoring and assessing hens.

3.1. Understanding YOLOv8

YOLOv8 incorporates several modifications over its predecessors to deliver faster and more accurate object detection. Its most notable change is an anchor-free detection head: instead of refining predefined anchor boxes, the model predicts, for each location on its feature grids, the distances from that point to the four edges of an object's box. Removing anchors eliminates the need to tune anchor sizes for a particular dataset and can improve robustness to objects of different shapes and scales. The head is also decoupled, with separate branches for classification and box regression. For feature extraction, YOLOv8 uses a CSPDarknet-style convolutional backbone built from C2f modules, which strikes a balance between computational efficiency and detection accuracy. YOLOv8 remains a convolutional architecture rather than a Transformer-based one.
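To make the anchor-free idea concrete, here is a simplified, framework-free sketch of how a single box can be decoded. It assumes the head outputs, for one grid cell, four sets of bin logits (left, top, right, bottom) whose softmax expectation gives each edge distance in stride units, in the style of Distribution Focal Loss; the function names and the 16-bin size in the example are assumptions, and batching, class scores, and non-maximum suppression are omitted.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def expected_distance(bin_logits):
    """Edge distance as the expected value of a distribution over bins 0..n-1
    (in stride units), as in Distribution Focal Loss style box regression."""
    probs = softmax(bin_logits)
    return sum(i * p for i, p in enumerate(probs))


def decode_box(col, row, stride, ltrb_logits):
    """Decode one anchor-free prediction into pixel coordinates (x1, y1, x2, y2).

    col, row: grid-cell indices; the anchor point is the cell centre.
    stride: pixels per grid cell at this feature level.
    ltrb_logits: four lists of bin logits for the left/top/right/bottom distances.
    """
    ax, ay = col + 0.5, row + 0.5
    left, top, right, bottom = (expected_distance(bins) for bins in ltrb_logits)
    return ((ax - left) * stride, (ay - top) * stride,
            (ax + right) * stride, (ay + bottom) * stride)


def peak(k, n=16):
    """Hypothetical logits sharply peaked at bin k."""
    logits = [0.0] * n
    logits[k] = 100.0
    return logits


print(decode_box(10, 5, 8, [peak(2), peak(1), peak(3), peak(4)]))
```

With sharply peaked logits the decoded distances are almost exactly the peak bin indices, so the cell at column 10, row 5 with stride 8 yields a box of roughly (68, 36, 108, 76).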

3.2. Applying YOLOv8 to Hen Monitoring and Assessment

Applying the YOLOv8 model to hen monitoring and assessment requires a systematic approach to data collection, annotation, and pre-processing. Here is a closer look at these steps:

3.2.1. Dataset Preparation

To use YOLOv8 for hen monitoring, the first step is to prepare a suitable dataset of hen images. Images should show hens in various positions and conditions, taken from different angles and under different lighting. Each image should be labeled with bounding boxes around the hens and annotated with any other necessary information (such as a hen's health condition). The higher the quality and diversity of your dataset, the better your model will perform.

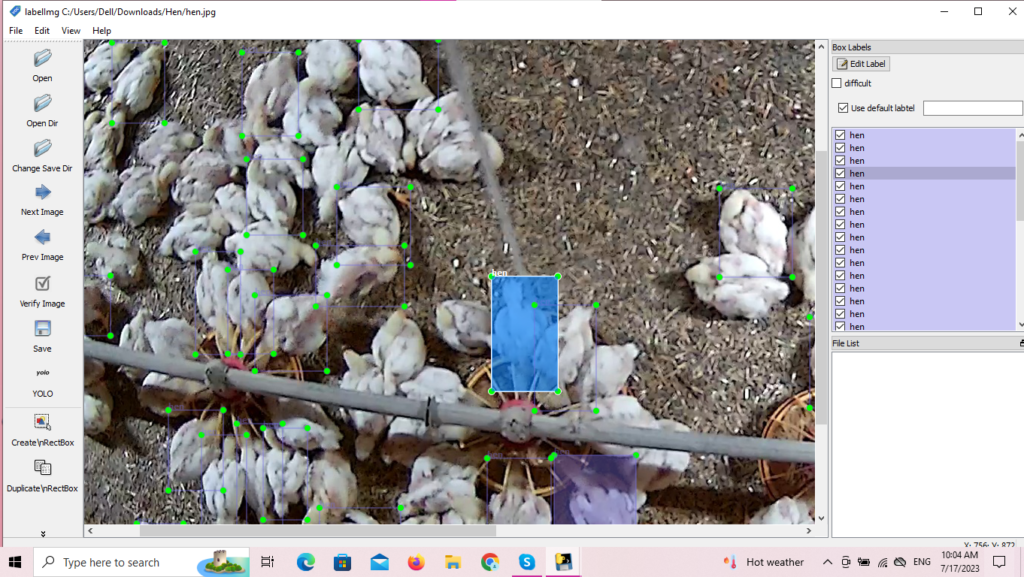

Figure 1: Dataset Preparation of Hens

3.2.2. Data Pre-processing

Data pre-processing involves preparing the images and annotations for training the YOLOv8 model. Bounding box coordinates are normalized relative to the image size, to values between 0 and 1, and pixel values are typically scaled to the same range, which helps the model converge faster. YOLOv8 trains on a fixed input size (640 × 640 pixels by default in the Ultralytics implementation), so images of different sizes need to be resized. When resizing, preserve the aspect ratio, for example by scaling the longer side to the target size and padding the shorter side (letterboxing), to avoid distortion; the Ultralytics training pipeline handles this resizing internally. It is also common to apply data augmentation techniques such as rotation, flipping, and translation to increase the effective size of the dataset and add variability, which can improve model performance.
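The aspect-ratio-preserving resize (letterboxing) described above reduces to a little arithmetic, shown here as a sketch. The 640-pixel target matches the Ultralytics default input size; the function names are hypothetical, and in practice the Ultralytics pipeline performs this step for you. The key point is that the same scale and padding must be applied to the bounding boxes as to the image.

```python
def letterbox_params(width, height, target=640):
    """Compute an aspect-ratio-preserving resize plus padding to a square input.

    Returns (scale, new_w, new_h, pad_x, pad_y), where pad_x and pad_y are the
    padding added on each side (left/right and top/bottom respectively).
    """
    scale = min(target / width, target / height)  # fit the longer side
    new_w, new_h = round(width * scale), round(height * scale)
    pad_x = (target - new_w) / 2
    pad_y = (target - new_h) / 2
    return scale, new_w, new_h, pad_x, pad_y


def map_box(box, scale, pad_x, pad_y):
    """Map an (x1, y1, x2, y2) box from original pixels into the letterboxed image."""
    x1, y1, x2, y2 = box
    return (x1 * scale + pad_x, y1 * scale + pad_y,
            x2 * scale + pad_x, y2 * scale + pad_y)


s, w, h, px, py = letterbox_params(1920, 1080)
print(w, h, px, py)
print(map_box((0, 0, 1920, 1080), s, px, py))
```

A 1920 × 1080 frame is scaled to 640 × 360 and padded by 140 pixels above and below, so a box covering the full frame maps to the central band of the 640 × 640 input.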

3.2.3. Data Collection

Data collection underpins the whole process of training YOLOv8 for hen monitoring. High-quality images of hens are needed, covering various conditions, positions, angles, lighting conditions, and backgrounds. In this blog post, we used images of hens taken from an open-source repository.

3.2.4. Annotation

Once the dataset is collected, each image must be annotated. For YOLOv8, the annotation should include a bounding box around each hen in the image and a class label indicating the hen's condition or type if the assessment is based on certain attributes. Depending on the tool and export format, a box may be stored as the (x, y) coordinates of its top-left corner plus its width and height (as in COCO), or as its top-left and bottom-right corners (as in Pascal VOC). Annotation can be time-consuming, but tools such as LabelImg and VGG Image Annotator (VIA) can speed it up. For this blog post, we used LabelImg to annotate the images.

Annotations exported in other formats, commonly CSV, JSON, or XML files, need to be converted into a format that YOLOv8 can read (LabelImg can also save labels in YOLO format directly). YOLOv8 uses one text file per image, in which each line describes one bounding box as class x_center y_center width height, separated by spaces. The coordinates are normalized relative to the image's width and height. By following these steps of data collection, annotation, and pre-processing, you can prepare a suitable dataset for training a YOLOv8 model for hen monitoring and assessment.
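The conversion step can be sketched as follows for Pascal VOC XML files, the format LabelImg writes by default. The class-name-to-ID mapping and the function name are assumptions; only Python's standard library is used.

```python
import xml.etree.ElementTree as ET


def voc_to_yolo(xml_text, class_ids):
    """Convert one Pascal VOC annotation (as written by LabelImg) to YOLO label lines.

    class_ids: mapping from class name to integer ID, e.g. {"hen": 0} (assumed).
    Each output line is "class x_center y_center width height", normalized to [0, 1].
    """
    root = ET.fromstring(xml_text)
    img_w = float(root.findtext("size/width"))
    img_h = float(root.findtext("size/height"))
    lines = []
    for obj in root.iter("object"):
        cls = class_ids[obj.findtext("name")]
        box = obj.find("bndbox")
        xmin, ymin, xmax, ymax = (float(box.findtext(k)) for k in ("xmin", "ymin", "xmax", "ymax"))
        x_c = (xmin + xmax) / 2 / img_w  # box centre, normalized by image width
        y_c = (ymin + ymax) / 2 / img_h  # box centre, normalized by image height
        w = (xmax - xmin) / img_w
        h = (ymax - ymin) / img_h
        lines.append(f"{cls} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}")
    return lines


sample = """<annotation>
  <size><width>640</width><height>480</height><depth>3</depth></size>
  <object><name>hen</name>
    <bndbox><xmin>100</xmin><ymin>120</ymin><xmax>300</xmax><ymax>360</ymax></bndbox>
  </object>
</annotation>"""
print(voc_to_yolo(sample, {"hen": 0}))
```

For COCO-style boxes given as top-left corner plus width and height, the centre is x + w / 2 and y + h / 2 before normalizing, and the width and height only need dividing by the image size.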

Figure 2: Hen data preparation with YOLOv8

3.3. Training the Model

Once the dataset is ready, the next step is to train the YOLOv8 model. Before training, split the dataset into training, validation, and test sets. The model is trained on the training set, its hyperparameters are tuned against the validation set, and its final performance is measured on the test set. During training, the model learns the visual patterns that signify a hen in an image. Because YOLOv8 is trained end to end, learning these patterns directly from image pixels and bounding box labels, it needs neither hand-crafted features nor a separate region-proposal stage.
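A reproducible train/validation/test split, plus the small dataset configuration file that Ultralytics YOLOv8 reads, might look like this. The 70/20/10 ratio, the images/train-style folder layout, and the helper names are assumptions rather than requirements.

```python
import random


def split_dataset(image_names, train=0.7, val=0.2, seed=42):
    """Shuffle deterministically and split into (train, val, test) lists.

    The 70/20/10 default is a common convention, not a requirement.
    """
    names = sorted(image_names)  # sort first so the split does not depend on input order
    random.Random(seed).shuffle(names)
    n_train = round(len(names) * train)
    n_val = round(len(names) * val)
    return names[:n_train], names[n_train:n_train + n_val], names[n_train + n_val:]


def data_yaml(root, class_names):
    """Render a dataset config with the path/train/val/test/names keys Ultralytics reads.

    Assumes images are stored under <root>/images/{train,val,test}.
    """
    lines = [f"path: {root}", "train: images/train", "val: images/val",
             "test: images/test", "names:"]
    lines += [f"  {i}: {name}" for i, name in enumerate(class_names)]
    return "\n".join(lines) + "\n"


names = [f"img_{i:03d}.jpg" for i in range(100)]
tr, va, te = split_dataset(names)
print(len(tr), len(va), len(te))
print(data_yaml("datasets/hens", ["hen"]))
```

With the Ultralytics package installed, training would then typically be started with something like YOLO("yolov8n.pt").train(data="hens.yaml", epochs=100, imgsz=640), where hens.yaml holds the rendered config and the epoch count is an arbitrary example.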

3.4. Evaluating the Model

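Once the YOLOv8 model has been trained, its performance needs to be evaluated on a separate test set that the model has not seen during training. In object detection, a predicted box usually counts as correct when its intersection over union (IoU) with a ground-truth box reaches a threshold such as 0.5. Common metrics are precision, recall, F1 score, and mean average precision (mAP), typically reported at an IoU threshold of 0.5 (mAP@0.5) and averaged over thresholds from 0.5 to 0.95 (mAP@0.5:0.95).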
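The sketch below shows how precision, recall, and F1 can be computed for one image by greedily matching predictions, in order of confidence, to ground-truth boxes at an IoU threshold of 0.5. It is a simplified illustration (single class, one image, no mAP) with hypothetical function names; Ultralytics' validation mode reports mAP over the whole test set.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall_f1(preds, truths, iou_thr=0.5):
    """Greedy one-to-one matching of predictions to ground truth for one image.

    preds: list of (confidence, box); truths: list of boxes.
    Each ground-truth box can be matched at most once.
    """
    matched, tp = set(), 0
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_j, best_iou = None, iou_thr
        for j, gt in enumerate(truths):
            if j in matched:
                continue
            score = iou(box, gt)
            if score >= best_iou:
                best_j, best_iou = j, score
        if best_j is not None:
            matched.add(best_j)
            tp += 1
    fp, fn = len(preds) - tp, len(truths) - tp
    precision = tp / (tp + fp) if preds else 0.0
    recall = tp / (tp + fn) if truths else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


truths = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(0.9, (0, 0, 10, 10)), (0.8, (50, 50, 60, 60))]
print(precision_recall_f1(preds, truths))
```

Here one prediction matches a hen and one is a false positive, while the second hen is missed, so precision, recall, and F1 are all 0.5.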

3.5. Real-time Hen Monitoring and Assessment

With a trained YOLOv8 model, you can set up a real-time hen monitoring and assessment system. Such a system could process the live video feed from cameras installed on a poultry farm, detecting and classifying hens in real time. Anomalies such as unusual behavior, signs of poor health, or missing hens could then be quickly identified and addressed.
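To detect missing hens, one option, sketched here under assumptions, is to compare the per-frame detection count with the known flock size, using a sliding-window median so that a hen briefly hidden from the camera does not raise a false alarm. The class name, window length, and expected count are hypothetical.

```python
from collections import deque
from statistics import median


class HeadCountMonitor:
    """Flag possibly missing hens when the median per-frame detection count
    over a sliding window falls below the expected flock size.

    The median tolerates occasional missed detections, e.g. a hen briefly
    hidden behind a feeder.
    """

    def __init__(self, expected, window=30):
        self.expected = expected
        self.counts = deque(maxlen=window)

    def update(self, detections_in_frame):
        """Record one frame's detection count; return True if hens appear to be missing."""
        self.counts.append(detections_in_frame)
        if len(self.counts) < self.counts.maxlen:
            return False  # not enough history yet
        return median(self.counts) < self.expected


monitor = HeadCountMonitor(expected=10, window=5)
print([monitor.update(c) for c in [10, 9, 10, 10, 10]])
print([monitor.update(c) for c in [8, 8, 8]])
```

With Ultralytics, the per-frame count could come from len(result.boxes) for each result yielded by model.predict(source, stream=True).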

3.6. Advantages and Challenges

YOLOv8 provides an efficient and effective solution for real-time hen monitoring. It offers high speed, accuracy, and the ability to detect hens in various conditions. However, there are also challenges, such as the need for a large, diverse, and well-labeled dataset and the computational resources required for training the model.

4. Conclusion

This blog post presented the livestock monitoring landscape and showed that YOLOv8 provides an efficient, innovative, and scalable approach to monitoring and analyzing chickens. The model achieved impressive performance metrics, including high precision, recall, and F1 score, which are indicative of its robustness and reliability. YOLOv8's efficient single-shot detection and high-resolution image processing were particularly advantageous for the granular identification and tracking of hens in diverse and complex environments. By enabling real-time object detection, this method facilitates the observation of hen behavior, interactions, and overall health status. Moreover, YOLOv8 was able to differentiate between individual hens despite only minor differences in their physical attributes, demonstrating a high degree of specificity.

However, applying YOLOv8 to hen monitoring is not without challenges. The model's performance depends on the quality and diversity of the training data, so further improvements in data acquisition, processing, and augmentation could lead to even more accurate results. In addition, while the model performs well in standard situations, it may still struggle with edge cases such as overlapping or partially obscured hens, which require additional computational effort to resolve.